Create a chatbot in the console with Azure OpenAI and C#

-

.NET

Learn how to create an Azure OpenAI instance and deploy a model to it, how to integrate the Azure OpenAI SDK into your .NET application, and how to use the chat completions APIs to create a chatbot.

Change the ServiceLifetime after the service has been added to the .NET ServiceCollection

-

.NET

Learn how to change the lifetime of services already added to a service collection.

How to build a URL Shortener with C# .NET and Redis

-

.NET

Learn how to build a link shortener using C#, .NET, and Redis. You'll be using ASP.NET Core to build the URL forwarder and the System.CommandLine libraries to manage the data.

Announcing the Webhook Plugin: Validate your webhooks with the new webhook plugin for the Twilio CLI

-

Twilio

Emulate webhook requests to test your Twilio webhook applications using the new webhook plugin for the Twilio CLI.

Use XML Literals in Visual Basic .NET to generate TwiML

-

.NET

Learn how to generate TwiML instructions to respond to texts and voice calls using XML Literals in Visual Basic .NET and ASP.NET Core Minimal APIs.

How to generate TwiML using Strings in C#

-

.NET

Learn how Twilio uses webhooks and TwiML to give you control over how to respond to a call or text message. You can generate TwiML using strings in many ways, and with C# 11 you can now also use Raw String Literals.

Use Raw String Literals to generate TwiML in C# 11

-

.NET

Learn how Twilio uses webhooks and TwiML to give you control over how to respond to a call or text message. You can generate TwiML in many ways, and with C# 11 you can now also use Raw String Literals.
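Here is a minimal sketch of the idea. It assumes C# 11 (.NET 7 or later): an interpolated raw string literal holds the TwiML, and the message text is escaped with `SecurityElement.Escape` so characters like `&` and `<` cannot break the XML. The `CreateMessageTwiml` helper is hypothetical.

```csharp
using System;
using System.Security;

// Hypothetical helper: returns a TwiML document that replies with a single SMS message.
static string CreateMessageTwiml(string text)
{
    // Escape XML special characters (&, <, >, ", ') in the user-facing text.
    string escaped = SecurityElement.Escape(text);

    // Interpolated raw string literal (C# 11): the quotes inside the XML need no escaping,
    // and the indentation of the closing """ is stripped from every line.
    return $"""
        <?xml version="1.0" encoding="UTF-8"?>
        <Response>
            <Message>{escaped}</Message>
        </Response>
        """;
}

Console.WriteLine(CreateMessageTwiml("Ahoy & welcome!"));
```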

Use Visual Studio dev tunnels to handle Twilio Webhooks

-

.NET

Learn how to develop webhooks on your local machine using Visual Studio dev tunnels and ASP.NET Core.

How to create an ASP.NET Core Minimal API with Visual Basic .NET (there's no template)

-

.NET

Visual Basic .NET (VB) is not dead, but it's not getting the same amount of love as C# or even F#. Luckily, everything in .NET can be used from any .NET language, including VB, so you can still use ASP.NET Core and Minimal APIs with VB.

Find your US Representatives and Congressional Districts with SMS and ASP.NET Core

-

.NET

Learn how to create an SMS bot that looks up U.S. Congressional Districts and Representatives using the Google Civic Information API, C#, ASP.NET Core Minimal API, and Twilio SMS.

How to Bulk Email with C# and .NET: Zero to Hero

-

.NET

There are many ways to bulk email, each with its own strengths. Learn how to bulk email with C# and .NET using SendGrid.

How to test SMS and Phone Call applications with the Twilio Dev Phone

-

Twilio

Learn how to use Twilio's brand-new open-source tool, the Twilio Dev Phone, to test your SMS and phone call applications!

Provide default configuration to your .NET applications

-

.NET

Learn how to provide default options in your .NET configuration to reduce redundancy.

Send Email and SMS from Google Forms using Zapier, SendGrid, and Twilio

-

Twilio

Learn how to forward Google Form data via Email and SMS using Zapier, SendGrid, and Twilio.

How to better configure C# and .NET applications for SendGrid

-

.NET

Learn how to better configure your .NET applications for sending SendGrid emails.

How to get the full public URL of ASP.NET Core

-

.NET

Learn how to get the full public URL of your ASP.NET Core application.

How to generate absolute URLs in ASP.NET Core

-

.NET

Learn how to generate full absolute URLs in C# and ASP.NET Core web applications.

How to better configure C# and .NET applications for Twilio

-

.NET

Learn how to use multiple configuration sources, strongly-typed objects, and implement the options pattern in your .NET applications.

What's new in the Twilio helper library for ASP.NET (v5.73.0 - April 2022)

-

.NET

Learn about what's new and old with the Twilio helper library for ASP.NET in version 5.73.0

How to Send SMS without a Phone Number using C# .NET and an Alphanumeric Sender ID

-

.NET

You don't always need a phone number to send SMS! Learn how to send text messages using Alphanumeric Sender IDs.

How to prevent email HTML injection in C# and .NET

-

.NET

Learn how bad actors can inject HTML into your emails in your C# .NET applications and how to mitigate it.
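One common mitigation is to HTML-encode every user-supplied value before placing it into an HTML email body. Here is a minimal sketch using `WebUtility.HtmlEncode`; the `BuildEmailBody` helper and the sample input are hypothetical, and the post may cover additional techniques.

```csharp
using System;
using System.Net;

// Hypothetical helper: 'name' stands in for any untrusted input, such as a form field.
static string BuildEmailBody(string name)
{
    // Encode <, >, &, and quotes so the input is rendered as text instead of markup.
    string safeName = WebUtility.HtmlEncode(name);
    return $"<p>Hi {safeName}, thanks for signing up!</p>";
}

// A malicious "name" that tries to inject a link into the email.
Console.WriteLine(BuildEmailBody("<a href=\"https://evil.example\">Click me</a>"));
```

The injected anchor tag shows up as harmless visible text instead of a clickable link.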

Don't let your users get pwned via email HTML injection

-

Web

Learn how to prevent HTML injection into your emails and protect your users from bad actors!

How to send ASP.NET Core Identity emails with Twilio SendGrid

-

.NET

Learn how to use SendGrid to send account verification and password recovery emails in ASP.NET Core.

How to send Email in C# .NET using SMTP and SendGrid

-

.NET

You can use the SendGrid APIs to send emails, but you can also use SMTP with SendGrid. Learn how to send emails with C# .NET using SMTP and SendGrid.

Send Emails using C# .NET with Azure Functions and SendGrid Bindings

-

.NET

Learn how to send emails from Azure Functions using C# .NET and the SendGrid Bindings.

Integrate ngrok into ASP.NET Core startup and automatically update your webhook URLs

-

.NET

Learn how to integrate ngrok into ASP.NET Core's startup process to create secure public tunnels and automatically update your Twilio webhook URLs with a single command.

Integrate IndexNow with Umbraco CMS to submit content to search engines

-

Umbraco

Umbraco is very extensible for ASP.NET developers. Learn how to integrate IndexNow into the Umbraco backoffice so you can easily submit Umbraco content URLs to search engines for (re)crawling.

Send Scheduled SMS with C# .NET and Twilio Programmable Messaging

-

.NET

Learn how to schedule SMS with C# .NET and Twilio Messaging's latest feature: Scheduled Messaging!

An introduction to IndexNow and why you should care

-

Web

IndexNow is still relatively new but is getting a lot of interest in the search engine community. Hopefully, you will soon be able to submit your content once to IndexNow and stop manually submitting those URLs to every search engine console. And instead of search engines having to crawl the web over and over to look for new content, the content can be delivered directly from the source, reducing the environmental impact of crawlers.

Develop webhooks locally using Cloudflared Tunnel

-

Web

Webhooks are a common way to integrate with external services, including Twilio!

Learn how to develop webhooks locally using Cloudflare Tunnels!

How to get ASP.NET Core's local server URLs

-

.NET

Learn how to access ASP.NET Core's local server URLs in Program.cs, in controllers using Dependency Injection, and in an IHostedService or BackgroundService.

How to send SMS with C# .NET and Azure Functions using Twilio Output Binding

-

Azure

Learn how to send SMS from Azure Functions with C# .NET using the Twilio Output Binding.

How to send recurring emails in C# .NET using SendGrid and Quartz.NET

-

.NET

Learn how to send recurring emails with C# .NET using Quartz.NET and SendGrid's APIs.

Send Emails using the SendGrid API with .NET 6 and C#

-

.NET

Learn how to send emails using the SendGrid API with a .NET 6 console application and C#.

Introducing .NET Developer Niels Swimberghe

-

.NET

Meet Niels Swimberghe, the newest member of the Developer Voices team at Twilio. Niels is a .NET developer and will be producing technical content for the .NET community.

How to run Umbraco 9 as a Linux Docker container

-

Umbraco

Umbraco 9 has been built on top of .NET 5. As a result, you can now containerize your Umbraco 9 websites in Linux containers. Learn how to containerize Umbraco 9 with Docker.

Deploying Umbraco 9 to Azure App Service for Linux

-

Umbraco

Learn how to create the Azure infrastructure using the Azure CLI to host an Umbraco 9 website using Azure SQL and Azure App Service for Linux, and how to deploy your Umbraco 9 site.

Handle No-Answer Scenarios with Voicemail and Callback

-

.NET

With Twilio Programmable Voice, you can ask customers to leave a message and their phone number if nobody is available to take the call, so you can call them back later.

Thoughts and tips on moving to Umbraco 9 from Umbraco 8

-

Umbraco

.NET Core was a groundbreaking change to the .NET platform. It is blazing fast, open-source, and cross-platform across Windows, Linux, and macOS. With Umbraco 9, we finally get to enjoy all the new innovations from .NET Core. Read about my experience upgrading an Umbraco 8 website to Umbraco 9.

Get Your Head Together With Blazor’s New HeadContent and PageTitle

-

.NET

Let’s take a look at the three Blazor components .NET 6 is introducing to help you manage the head of your document—PageTitle, HeadContent, and HeadOutlet.

Collaborating on Power Apps Using Personal Environments, Power Platform CLI, Git, and Azure DevOps

-

Dynamics

It can be hard to collaborate on Power Apps with other developers. Sometimes you can overwrite each other's work or break things; however, each developer can safely make their changes by using personal environments instead of a shared development environment. They can then merge their work together into the shared environment. A developer can track changes to solutions using git and move solutions between environments using the Power Platform CLI and SolutionPackager tool.

Delaying JavaScript Execution Until HTML Elements are Present in Power Apps and Dynamics CRM

-

Dynamics

Learn how to customize forms using JavaScript in Model-Driven Power Apps forms and Dynamics CRM forms.

How to Create Dataverse Activities using Power Automate

-

Dynamics

In this Power Platform tutorial, you will set up an HTTP webhook using the "When an HTTP request is received" action. With the HTTP request JSON, you will calculate the duration between two timestamps and fetch a contact and user by finding matching phone numbers. Then, you will create a phone call and update the status of the phone call.

How to deploy Power Apps Portals using Azure Pipelines

-

Dynamics

Learn how to automatically deploy Power Apps portals using the Microsoft Power Platform CLI and Azure DevOps.

How to deploy Power Apps Solutions using Azure Pipelines

-

Dynamics

Learn how to automatically deploy Power Apps solutions using the Power Platform Build Tools and Azure DevOps.

Generic Linear Search/Sequential Search for a sequence in C# .NET

-

.NET

To practice algorithms and data structures, I reimplemented Linear Search/Sequential Search for a sequence using C#'s generic type parameters.
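For a rough idea of what a generic sequential search for a sequence can look like, here is a minimal C# sketch. The `IndexOfSequence` name and the `IEquatable<T>` constraint are illustrative choices, not necessarily what the post uses; it returns the index of the first match, or -1.

```csharp
using System;

// Naive (linear/sequential) search for 'needle' inside 'haystack'.
// Works for any element type that supports equality via IEquatable<T>.
static int IndexOfSequence<T>(T[] haystack, T[] needle) where T : IEquatable<T>
{
    if (needle.Length == 0) return 0;

    // Try every start position where the needle could still fit.
    for (int start = 0; start <= haystack.Length - needle.Length; start++)
    {
        int matched = 0;
        // Compare element by element until a mismatch or a full match.
        while (matched < needle.Length && haystack[start + matched].Equals(needle[matched]))
        {
            matched++;
        }
        if (matched == needle.Length) return start;
    }
    return -1; // Not found
}

Console.WriteLine(IndexOfSequence(new[] { 1, 2, 3, 4, 5 }, new[] { 3, 4 })); // 2
Console.WriteLine(IndexOfSequence("hello world".ToCharArray(), "world".ToCharArray())); // 6
```

In the worst case this compares O(n·m) elements, which is what smarter algorithms like Boyer–Moore–Horspool improve on.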

Generic Boyer–Moore–Horspool algorithm in C# .NET

-

.NET

To practice algorithms and data structures, I reimplemented the Boyer–Moore–Horspool algorithm for a sequence using C#'s generic type parameters.
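A minimal sketch of a generic Boyer–Moore–Horspool search follows. It assumes the element type can be used as a `Dictionary` key (sensible `Equals`/`GetHashCode`), which is one way to build the bad-character shift table for arbitrary `T`. The `HorspoolIndexOf` name is hypothetical.

```csharp
using System;
using System.Collections.Generic;

static int HorspoolIndexOf<T>(T[] haystack, T[] needle) where T : notnull
{
    int m = needle.Length, n = haystack.Length;
    if (m == 0) return 0;

    // Bad-character table: how far to shift when 'key' sits under the needle's last position.
    // Elements missing from needle[0..m-2] allow a full shift of m.
    var shift = new Dictionary<T, int>();
    for (int i = 0; i < m - 1; i++)
    {
        shift[needle[i]] = m - 1 - i;
    }

    var comparer = EqualityComparer<T>.Default;
    int pos = 0;
    while (pos <= n - m)
    {
        // Compare the needle right to left at the current alignment.
        int j = m - 1;
        while (j >= 0 && comparer.Equals(haystack[pos + j], needle[j])) j--;
        if (j < 0) return pos; // Full match

        // Shift based on the haystack element aligned with the needle's last element.
        pos += shift.TryGetValue(haystack[pos + m - 1], out int s) ? s : m;
    }
    return -1; // Not found
}

Console.WriteLine(HorspoolIndexOf("here is a simple example".ToCharArray(), "example".ToCharArray())); // 17
```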

Generic Binary Search in C# .NET

-

.NET

To practice algorithms and data structures, I reimplemented binary search using C#'s generic type parameters.
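A minimal sketch of a generic binary search, assuming the array is already sorted in ascending order according to `IComparable<T>`. Unlike the built-in `Array.BinarySearch`, which returns the bitwise complement of the insertion point, this hypothetical version simply returns -1 when the value is missing.

```csharp
using System;

static int BinarySearch<T>(T[] sorted, T target) where T : IComparable<T>
{
    int low = 0, high = sorted.Length - 1;
    while (low <= high)
    {
        // Avoids overflow of (low + high) on very large arrays.
        int mid = low + (high - low) / 2;
        int cmp = sorted[mid].CompareTo(target);
        if (cmp == 0) return mid;
        if (cmp < 0) low = mid + 1; // Target is in the upper half
        else high = mid - 1;        // Target is in the lower half
    }
    return -1; // Not found
}

Console.WriteLine(BinarySearch(new[] { 1, 3, 5, 7, 9, 11 }, 7)); // 3
Console.WriteLine(BinarySearch(new[] { "apple", "banana", "cherry" }, "cherry")); // 2
```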

Generic Insertion Sort in C# .NET

-

.NET

To practice algorithms and data structures, I reimplemented insertion sort using C#'s generic type parameters.
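A minimal sketch of a generic, in-place insertion sort (ascending), constrained to `IComparable<T>`. The method name and constraint are illustrative choices rather than the post's exact code.

```csharp
using System;

static void InsertionSort<T>(T[] items) where T : IComparable<T>
{
    for (int i = 1; i < items.Length; i++)
    {
        T current = items[i];
        int j = i - 1;
        // Shift every larger element in the sorted prefix one position to the right.
        while (j >= 0 && items[j].CompareTo(current) > 0)
        {
            items[j + 1] = items[j];
            j--;
        }
        // Drop the current element into the gap.
        items[j + 1] = current;
    }
}

var numbers = new[] { 5, 2, 9, 1, 5, 6 };
InsertionSort(numbers);
Console.WriteLine(string.Join(", ", numbers)); // 1, 2, 5, 5, 6, 9
```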

Generic Quick Sort in C# .NET

-

.NET

To practice algorithms and data structures, I reimplemented Quick Sort using C#'s generic type parameters.
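A minimal sketch of a generic, in-place quick sort. It uses the Lomuto partition scheme with the last element as pivot, which is only one of several partitioning strategies and not necessarily the one used in the post.

```csharp
using System;

static void QuickSort<T>(T[] items, int low, int high) where T : IComparable<T>
{
    if (low >= high) return;
    int p = Partition(items, low, high);
    QuickSort(items, low, p - 1);  // Sort elements left of the pivot
    QuickSort(items, p + 1, high); // Sort elements right of the pivot
}

// Lomuto partition: moves elements smaller than the pivot to the front
// and returns the pivot's final index.
static int Partition<T>(T[] items, int low, int high) where T : IComparable<T>
{
    T pivot = items[high];
    int i = low; // Next slot for an element smaller than the pivot
    for (int j = low; j < high; j++)
    {
        if (items[j].CompareTo(pivot) < 0)
        {
            (items[i], items[j]) = (items[j], items[i]);
            i++;
        }
    }
    // Move the pivot into its final position.
    (items[i], items[high]) = (items[high], items[i]);
    return i;
}

var numbers = new[] { 5, 2, 9, 1, 5, 6 };
QuickSort(numbers, 0, numbers.Length - 1);
Console.WriteLine(string.Join(", ", numbers)); // 1, 2, 5, 5, 6, 9
```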

Generic Merge Sort in C# .NET

-

.NET

To practice algorithms and data structures, I reimplemented merge sort using C#'s generic type parameters.
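A minimal sketch of a generic top-down merge sort that returns a new sorted array. It assumes C# 8 or later for the array range syntax (`items[..mid]`), and the `MergeSort` signature is illustrative.

```csharp
using System;

static T[] MergeSort<T>(T[] items) where T : IComparable<T>
{
    if (items.Length <= 1) return items;

    // Split in half and sort each half recursively.
    int mid = items.Length / 2;
    T[] left = MergeSort(items[..mid]);
    T[] right = MergeSort(items[mid..]);

    // Merge the two sorted halves; using '<=' keeps equal elements in order (stable sort).
    var result = new T[items.Length];
    int l = 0, r = 0, k = 0;
    while (l < left.Length && r < right.Length)
    {
        result[k++] = left[l].CompareTo(right[r]) <= 0 ? left[l++] : right[r++];
    }
    // Copy whatever remains in either half.
    while (l < left.Length) result[k++] = left[l++];
    while (r < right.Length) result[k++] = right[r++];
    return result;
}

var sorted = MergeSort(new[] { 5, 2, 9, 1, 5, 6 });
Console.WriteLine(string.Join(", ", sorted)); // 1, 2, 5, 5, 6, 9
```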

Generic Bubble Sort in C# .NET

-

.NET

To practice algorithms and data structures, I reimplemented bubble sort using C#'s generic type parameters.
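A minimal sketch of a generic, in-place bubble sort. The early exit when a pass makes no swaps is a common optimization and my own addition here, not necessarily part of the post's version.

```csharp
using System;

static void BubbleSort<T>(T[] items) where T : IComparable<T>
{
    for (int end = items.Length - 1; end > 0; end--)
    {
        bool swapped = false;
        for (int i = 0; i < end; i++)
        {
            // Swap adjacent elements that are out of order.
            if (items[i].CompareTo(items[i + 1]) > 0)
            {
                (items[i], items[i + 1]) = (items[i + 1], items[i]);
                swapped = true;
            }
        }
        // After each pass, the largest remaining element has "bubbled" up to index 'end'.
        if (!swapped) break; // Already sorted
    }
}

var numbers = new[] { 5, 2, 9, 1, 5, 6 };
BubbleSort(numbers);
Console.WriteLine(string.Join(", ", numbers)); // 1, 2, 5, 5, 6, 9
```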

Proxy your phone number with Twilio Programmable Voice and Twilio Functions

-

Twilio

You'll learn how to turn a Twilio phone number into a proxy phone number using Twilio Functions as webhooks for your Twilio phone number.

How to create a Discord Bot using the .NET worker template and host it on Azure Container Instances

-

Azure

Learn how to develop a Discord bot using the .NET worker template, containerize it using Docker, push the container image to Azure Container Registry, and host it on Azure Container Instances.

Guest on .NET Docs Show: Making Phone Calls 📞 from Blazor WebAssembly with Twilio Voice

-

.NET

Earlier this week, the folks at the .NET Docs Show invited me over to talk about Twilio, .NET, and Blazor WebAssembly. We discussed different architectures, workflow diagrams, Twilio capabilities, and how to integrate them using ASP.NET Web API and Blazor WebAssembly.

Download the new Azure logo as SVG, PNG, or JPEG here (high-res/transparent/white background)

-

Azure

Here's the new logo as SVG (scalable), PNG (transparent background), and JPEG at the high resolution of 3000x3000 pixels. (I copied the SVG code from the Azure Portal, modified it, and exported it to PNG/JPEG.)

How to bypass Captchas in Selenium UI tests

-

.NET

Captchas are often used as a way to combat spam on website forms. Unfortunately, this also makes it harder to verify the functionality of the forms using UI tests like Selenium.

To work around this you can extend your website with a bypass form.

Capture emails during development using smtp4dev and UI Test with Selenium

-

.NET

You can use smtp4dev during development to prevent emails from going out to real customers. This tool also supports IMAP, which you can use to query emails for automated testing.

Create ZIP files on HTTP request without intermediate files using ASP.NET MVC Framework

-

.NET

The ZipArchive wraps any stream to read, create, and update ZIP archives. You can send the result to the client using ASP.NET MVC Framework.

How to deploy Blazor WebAssembly to DigitalOcean App Platform

-

.NET

Blazor WebAssembly can be served as static files. These files can be hosted in static hosting services such as DigitalOcean App Platform.

Don't use HttpContext.Current, especially when using async

-

.NET

Avoid using HttpContext.Current if possible, especially in asynchronous code. Use the properties provided on the Page or Controller instead and pass them on to the asynchronous code.

Create ZIP files on HTTP request without intermediate files using ASP.NET Core MVC, Razor Pages, and endpoints

-

.NET

The ZipArchive wraps any stream to read, create, and update ZIP archives. You can send the result to the client using ASP.NET Core MVC, Razor Pages, and endpoints.
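A minimal sketch of the core idea, outside of any web framework: `ZipArchive` writes straight into a `MemoryStream`, so no intermediate files touch the disk, and the resulting bytes could then be sent to the client. The `CreateZip` helper and its tuple-based parameters are hypothetical.

```csharp
using System;
using System.IO;
using System.IO.Compression;

// Hypothetical helper: builds a ZIP archive in memory from (file name, text content) pairs.
static byte[] CreateZip(params (string Name, string Content)[] files)
{
    using var buffer = new MemoryStream();
    // leaveOpen: true keeps 'buffer' usable after the archive is disposed.
    using (var archive = new ZipArchive(buffer, ZipArchiveMode.Create, leaveOpen: true))
    {
        foreach (var (name, content) in files)
        {
            ZipArchiveEntry entry = archive.CreateEntry(name);
            // In Create mode only one entry stream may be open at a time, so close it per entry.
            using (var writer = new StreamWriter(entry.Open()))
            {
                writer.Write(content);
            }
        }
    } // Disposing the archive writes the central directory; only now are the bytes complete.
    return buffer.ToArray();
}

byte[] zipBytes = CreateZip(("hello.txt", "Hello"), ("world.txt", "World"));
using var readBack = new ZipArchive(new MemoryStream(zipBytes), ZipArchiveMode.Read);
Console.WriteLine(readBack.Entries.Count); // 2
```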

How to deploy Blazor WebAssembly to Cloudflare Pages

-

.NET

Blazor WebAssembly can be served as static files. These files can be hosted in static hosting services such as Cloudflare Pages.

Download the right ChromeDriver version & keep it up to date on Windows/Linux/macOS using C# .NET

-

.NET

Chrome updates itself frequently, which can cause a mismatch with your ChromeDriver version. Using C# .NET, you can download the correct version of ChromeDriver and keep it up to date.

Better Authentication with Twilio API Keys

-

.NET

API Keys are now the preferred way to authenticate with Twilio's API. You can create as many API Keys as you need and remove them if they are compromised or no longer used.

How to deploy Blazor WebAssembly to Heroku

-

.NET

Heroku doesn't officially offer a static hosting service but does have an experimental 'buildpack' which gives you static hosting capabilities you can use to deploy and host Blazor WebAssembly.

How to deploy Blazor WebAssembly to AWS Amplify

-

.NET

Blazor WebAssembly can be served as static files. These files can be hosted in static hosting services such as AWS Amplify.

Download the right ChromeDriver version & keep it up to date on Windows/Linux/macOS using PowerShell

-

PowerShell

Chrome updates itself frequently, which can cause a mismatch with your ChromeDriver version. Using PowerShell, you can download the correct version of ChromeDriver and keep it up to date.

Use project Tye to host Blazor WASM and ASP.NET Web API on a single origin to avoid CORS

-

.NET

Using Microsoft's experimental Project Tye, you can configure the proxy to forward requests to '/api' to the Web API, and all other requests to the Blazor WASM client.

Introducing online base64 image encoder

-

Web

This little tool generates the base64 data URL for the file you select without uploading it to a server.

The base64 encoding happens inside your browser.
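The tool itself runs in the browser; as a sketch of the data URL format it produces, here is the same conversion in C#. The `ToDataUrl` helper is hypothetical, and it assumes the MIME type is already known rather than detected from the file.

```csharp
using System;

// Hypothetical helper: builds a data URL in the form data:[mime type];base64,[data].
static string ToDataUrl(byte[] bytes, string mimeType) =>
    $"data:{mimeType};base64,{Convert.ToBase64String(bytes)}";

// The bytes of the ASCII text "Hi".
Console.WriteLine(ToDataUrl(new byte[] { 0x48, 0x69 }, "text/plain")); // data:text/plain;base64,SGk=
```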

How to deploy Blazor WebAssembly to Netlify

-

.NET

Now that you can run .NET web applications without server-side code, you can deploy these applications to various static site hosts, such as Netlify.

Run Custom Availability Tests using PowerShell and Azure Application Insights, even on-premises

-

Azure

The built-in availability tests in Azure Application Insights are great but very basic. Fortunately, you can create your own. Learn how to create a custom availability test using PowerShell and the Application Insights .NET SDK.

Video: Copy HTTP Requests from Chrome/Edge DevTools to PowerShell/cURL/Fetch

-

Web

You can copy the recorded HTTP requests from Chrome and Edge DevTools to PowerShell/cURL/Fetch. This allows you to quickly replay HTTP requests from the console/command line, saving you time!

Video: Take screenshots using built-in commands in Chrome/Edge

-

Web

Chromium browsers such as Chrome and Edge have many lesser-known features. One of those features is the ability to take screenshots of your current tab content.

Use YARP to host client and API server on a single origin to avoid CORS

-

.NET

Using Microsoft's new reverse proxy "YARP", you can configure the proxy to forward requests to '/api' to the Web API, and all other requests to the Blazor WASM client.

Video: How to deploy Blazor WebAssembly to Firebase Hosting

-

.NET

With ASP.NET Blazor WebAssembly, you can create .NET applications that run completely inside of the browser. The output of a Blazor WASM project consists entirely of static files. You can deploy these applications to various static site hosts like Firebase Hosting.

Introducing Online GZIP de/compressor, built with Blazor WebAssembly

-

.NET

Using Blazor WASM and the GZIP APIs, I created this little web application, which you can use to compress and decompress multiple files using GZIP.
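Assuming "the GZIP APIs" here refers to .NET's `GZipStream` from `System.IO.Compression`, a minimal compress/decompress round trip looks roughly like this. The `Compress` and `Decompress` helper names are hypothetical.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Text;

// Compress a byte array into GZIP format.
static byte[] Compress(byte[] data)
{
    using var output = new MemoryStream();
    // leaveOpen: true so 'output' can still be read after the GZipStream is disposed.
    using (var gzip = new GZipStream(output, CompressionLevel.Optimal, leaveOpen: true))
    {
        gzip.Write(data, 0, data.Length);
    } // Disposing the GZipStream flushes the final compressed block.
    return output.ToArray();
}

// Decompress GZIP bytes back into the original byte array.
static byte[] Decompress(byte[] compressed)
{
    using var input = new GZipStream(new MemoryStream(compressed), CompressionMode.Decompress);
    using var output = new MemoryStream();
    input.CopyTo(output);
    return output.ToArray();
}

byte[] original = Encoding.UTF8.GetBytes("Hello, GZIP! Hello, GZIP! Hello, GZIP!");
byte[] packed = Compress(original);
Console.WriteLine(Encoding.UTF8.GetString(Decompress(packed))); // Hello, GZIP! Hello, GZIP! Hello, GZIP!
```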

Pre-render Blazor WebAssembly at build time to optimize for search engines

-

.NET

Using pre-rendering tools like react-snap, you can pre-render Blazor WASM. Additionally, you can integrate these pre-rendering tools inside of your continuous integration and continuous deployment pipelines.

Fix Blazor WebAssembly PWA integrity checks

-

.NET

The service-worker-assets.js file is generated during publish and any modification made to the listed files after publish will cause the integrity check to fail.

How to deploy Blazor WebAssembly to Firebase Hosting

-

.NET

With ASP.NET Blazor WebAssembly, you can create .NET applications that run completely inside of the browser. The output of a Blazor WASM project consists entirely of static files. You can deploy these applications to various static site hosts like Firebase Hosting.

Video: How to deploy ASP.NET Blazor WebAssembly to GitHub Pages

-

.NET

With ASP.NET Blazor WebAssembly, you can create .NET applications that run completely inside of the browser. The output of a Blazor WASM project consists entirely of static files. You can deploy these applications to various static site hosts like GitHub Pages.

Interacting with JavaScript Objects using the new IJSObjectReference in Blazor

-

.NET

A new type is introduced in .NET 5 called IJSObjectReference. This type holds a reference to a JavaScript object and can be used to invoke functions available on that JavaScript object.

Harden Anti-Forgery Tokens with IAntiforgeryAdditionalDataProvider in ASP.NET Core

-

.NET

Using IAntiforgeryAdditionalDataProvider you can harden ASP.NET Core's anti-forgery token feature by adding additional data and validating the additional data.

Don't forget to provide image alt metadata for open graph and twitter cards social sharing

-

Web

Because support was historically lacking and it rarely appears in samples, the important alternative text meta tag is often forgotten. To provide alt text for your shared images, use the og:image:alt and twitter:image:alt meta tags.

Rethrowing your exceptions wrong in .NET could erase your stacktrace

-

.NET

You may be erasing your stacktrace if you are catching and rethrowing exceptions the wrong way. This could make debugging a nightmare because you don't know where the exception was originally thrown.
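A minimal sketch contrasting the two ways of rethrowing: `throw;` keeps the original stack trace, while `throw ex;` resets it to the rethrow site. The method names are hypothetical; `NoInlining` is only there so the JIT cannot fold the throwing method into its caller and hide its frame.

```csharp
using System;
using System.Runtime.CompilerServices;

[MethodImpl(MethodImplOptions.NoInlining)]
static void ThrowsOriginally() => throw new InvalidOperationException("Boom");

static void RethrowCorrectly()
{
    try { ThrowsOriginally(); }
    catch (InvalidOperationException) { throw; } // Preserves the original stack trace
}

static void RethrowWrongly()
{
    try { ThrowsOriginally(); }
    catch (InvalidOperationException ex) { throw ex; } // Stack trace now starts here
}

// Runs the action and returns the stack trace of whatever it throws.
static string CaptureStackTrace(Action action)
{
    try { action(); return ""; }
    catch (Exception ex) { return ex.StackTrace ?? ""; }
}

Console.WriteLine(CaptureStackTrace(RethrowCorrectly).Contains("ThrowsOriginally")); // True
Console.WriteLine(CaptureStackTrace(RethrowWrongly).Contains("ThrowsOriginally"));   // False
```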

Making Phone Calls from Blazor WebAssembly with Twilio Voice

-

.NET

Using Twilio Voice you can add the ability to make and receive phone calls from your own ASP.NET web applications. Twilio’s helper library for JavaScript makes it easy to integrate client functionality into web front ends built with Blazor WebAssembly, and the Twilio NuGet packages provide you with convenient interfaces to Twilio’s APIs for server-side tasks.

Verify what your webpage looks like in Google, Facebook, Twitter, LinkedIn, and more

-

Web

Social media platforms each generate link previews/cards differently. You can use the official debuggers/inspectors provided by Facebook, Twitter, LinkedIn to debug the previews. To quickly preview multiple social networks + Google + Slack, you can use metatags.io.

Querying top requested URLs using Kusto and Log Analytics in Azure Application Insights

-

Azure

One query many webmasters and content editors are interested in is which URLs are most popular.

Azure Application Insights can answer this question with data coming from back-end telemetry and can include request telemetry of static files as well.

ConvertFrom-SecureString : A parameter cannot be found that matches parameter name 'AsPlainText'

-

PowerShell

If you're using older versions of PowerShell, you do not have the 'AsPlainText' flag available to you. You're not out of luck though, you can quickly create your own function which will give you the same result.

Communicating between .NET and JavaScript in Blazor with in-browser samples

-

.NET

The success of Blazor relies heavily on how well it can integrate with the existing rich JavaScript ecosystem. Blazor enables this integration by letting you call JavaScript functions from Blazor and .NET functions from JavaScript, which is referred to as 'JavaScript interoperability'.

Querying 404s using Kusto and Log Analytics in Azure Application Insights

-

Azure

Using the built-in Log Analytics workspace in Azure Application Insights, you can query all the URLs causing 404 HTTP status responses.

Configure CORS using AppSettings or Custom Configuration Sections in ASP.NET Web API

-

.NET

Microsoft's CORS library works, but the attributes force you to hardcode the CORS headers. By creating custom CORS attributes, you can read the CORS configuration from AppSettings or a custom config section.

Configure ServicePointManager.SecurityProtocol through AppSettings

-

.NET

Usually .NET automatically finds a security protocol in common, but sometimes you have to update ServicePointManager.SecurityProtocol explicitly.

Real-time applications with Blazor Server and Firestore

-

.NET

Blazor Server is built on SignalR, which is built on websockets. Among other things, websockets enable Blazor Server to push changes from the server to the browser at any time. You can build real-time UIs when you combine this with a real-time database.

Pushing UI changes from Blazor Server to browser on server raised events

-

.NET

Blazor Server is built on SignalR, and SignalR is built on websockets among other techniques. The combination of these technologies allows Blazor Server to push UI changes to the client without the client requesting those changes.

PowerShell Script: Scan documentation for broken links

-

PowerShell

A lot of documentation links to other locations on the web using URLs. Unfortunately, many URLs change over time. Additionally, it's easy to make a typo or fat-finger a URL, resulting in incorrect links.

Here's a small PowerShell script you can run on your documentation repositories that will tell you which URLs don't resolve to a proper redirect or an HTTP 200 status code.

Changing Serilog Minimum level without application restart on .NET Framework and Core

-

.NET

There are many ways to configure Serilog. The configuration library has the additional advantage that it supports dynamic reloading of the MinimumLevel and LevelSwitches.

Using ConfigurationProviders from Microsoft.Extensions.Configuration on .NET Framework

-

.NET

.NET Core introduced new APIs. Some of those libraries are built to support multiple .NET platforms, including .NET Framework. So, if you're still using .NET Framework, you can also take advantage of these new libraries.

Introducing Umbraco's KeepAlive Ping configuration

-

Umbraco

Umbraco 8.6 introduces the new keepAlive configuration inside of `umbracoSettings.config`, which allows you to change "keepAlivePingUrl" and "disableKeepAliveTask".

Capturing ASP.NET Framework RawUrl with Azure Application Insights

-

Azure

By default, Application Insights will capture a lot of data about your ASP.NET applications including HTTP Requests made to your website. Unfortunately, the URL captured by Application Insights doesn't always match the URL originally requested by the client.

Capturing ASP.NET Core original URL with Azure Application Insights

-

Azure

By default, Application Insights will capture a lot of data about your ASP.NET applications including HTTP Requests made to your website. Unfortunately, the URL captured by Application Insights doesn't always match the URL originally requested by the client.

How to deploy ASP.NET Blazor WebAssembly to GitHub Pages

-

.NET

With ASP.NET Blazor WebAssembly, you can create .NET applications that run completely inside of the browser. The output of a Blazor WASM project consists entirely of static files. You can deploy these applications to various static site hosts like GitHub Pages.

How to deploy Blazor WASM & Azure Functions to Azure Static Web Apps using GitHub

-

.NET

With ASP.NET Blazor WebAssembly, you can create .NET applications that run inside of the browser. The output of a Blazor WASM project consists entirely of static files. You can deploy these applications to static site hosts, such as Azure Static Web Apps and GitHub Pages.

How to run code after Blazor component has rendered

-

.NET

Blazor components render their template whenever state has changed and sometimes you need to invoke some code after rendering has completed. This blog post will show you how to run code after your Blazor component has rendered, on every render or as needed.

Checking out NDepend: a static code analysis tool for .NET

-

.NET

NDepend is a static code analysis tool (SAST) for .NET. NDepend will analyze your code for code smells, best practices, complexity, dead code, naming conventions, and much more.

Hidden Gem: Take screenshots using built-in commands in Chrome/Edge

-

Web

Chromium browsers such as Chrome and Edge have many lesser-known features. One of those features is the ability to take screenshots of your current tab content. These commands are even more powerful when you emulate different devices and resolutions.

How to add Hangfire to DNN

-

.NET

DNN already has an excellent built-in scheduler you can use to schedule tasks, but you may be more familiar with Hangfire and prefer it for running background jobs. These instructions will walk you through configuring Hangfire in DNN.

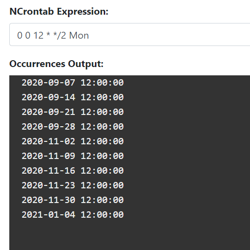

Introducing NCrontab Tester (Blazor WebAssembly)

-

.NET

Introducing NCrontab Expression tester, made using the NCrontab .NET library and Blazor WebAssembly, and hosted on Azure Static Web Apps.

Copy HTTP Requests from Chrome/Edge DevTools to PowerShell/cURL/Fetch

-

Web

You can copy the recorded HTTP requests from Chrome and Edge DevTools to PowerShell/cURL/Fetch. This allows you to quickly replay HTTP requests from the console/command line, saving you time!

Download .NET Windows Theme based on the new .NET Brand GitHub repo

-

.NET

The .NET GitHub org has a new Brand repository containing a detailed Brand guidelines PDF, logos, illustrations, and wallpapers. Using these resources, I put together a Windows Theme for .NET, which you can download here!

Prey: a game out of this world (5⭐)

-

Game

Prey is a first-person thriller/horror shooter situated in a massive space station orbiting the moon. Aliens have infested the station and it's up to you to make sure the aliens don't reach Earth. The game provides a large variety of game mechanics like crafting, collecting, inventory management, sneaking, platforming, puzzling, and satisfying combat.

Use PowerShell to communicate with Dynamics CRM using the .NET XRM SDK

-

Dynamics

If you've developed client applications or plugins for Dynamics CRM before, you are familiar with the CRM/XRM DLLs. You may have gotten those DLLs from the CRM installation, the SDK zip, or the NuGet package. Another way to interact with the CRM DLLs is through PowerShell. PowerShell is built upon .NET, meaning you can call exactly the same CRM libraries from PowerShell as from .NET applications.

PowerShell snippet: Get optionset value/labels from Dynamics CRM Entity/Attribute

-

Dynamics

Instead of having to use the CRM interface to copy all the labels and values manually, you can save yourself a lot of time using this PowerShell script.

Using the following script file named "GetOptionSet.ps1", you can list all the value/label pairs for a given Entity + OptionSet-Attribute:

How to run ASP.NET Core Web Application as a service on Linux without reverse proxy, no NGINX or Apache

-

.NET

This article walks us through running an ASP.NET Core web application on Linux (RHEL) using systemd. The end goal is to serve ASP.NET Core directly via the built-in Kestrel web server over ports 80/443.

How to run a .NET Core console app as a service using Systemd on Linux (RHEL)

-

.NET

Let's learn how to run a .NET Core console application on systemd. After running a console app as a service, we'll upgrade to using the dotnet core worker service template designed for long running services/daemons. Lastly, we'll add the systemd package integration.

Implementing Responsive Images in Umbraco

-

Umbraco

The web platform has responsive image capabilities such as the srcset-attribute, sizes-attribute, and the picture-element. These capabilities may seem daunting sometimes. We'll learn how to make them available and maintainable to Umbraco content editors.

VS Code C# Extension not working in Remote SSH mode on Red Hat Linux? Here's a workaround

-

.NET

Unfortunately, the C# Extension for VS Code malfunctions when following the official steps to install .NET Core on Red Hat and using the Remote SSH extension. OmniSharp is not able to find the .NET Core installation but we can work around this.

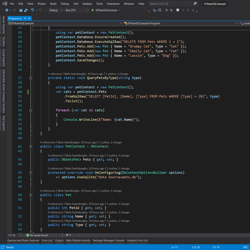

Querying data using raw SQL & Stored Procedures in Entity Framework Core

-

.NET

When LINQ queries can't meet the performance requirements of your application, you can leverage raw SQL and still have EF map the data to concrete .NET objects. You can also invoke Stored Procedures in case the logic of the SQL queries needs to reside in the SQL Database.

Auto generate Heading Anchors using HTML AgilityPack DOM Manipulation

-

.NET

Manually adding an anchor to every heading would be a painful solution. So let's learn how we can achieve this by generating the Heading Anchors using the HTML AgilityPack .NET library.

How to run .NET Core Selenium UI tests on Azure DevOps Pipelines Windows/Ubuntu agents? 🚀

-

.NET

This post discusses how to take your .NET Core Selenium UI tests and run them in Azure DevOps Pipelines on both Windows and Ubuntu agents. Use the GitHub repository accompanying this post, which contains the UI test project necessary to follow along.

How to UI test using Selenium and .NET Core on Windows, Ubuntu, and macOS

-

.NET

Selenium is a browser 🌐 automation tool mainly used for UI testing and automating tasks. Selenium is agnostic of operating system, programming language, and browser.

For example, you can automate Chrome on macOS using C#, Firefox on Windows using Python, or Opera on Linux using Node.js.

Deleting old web app logs using Azure Web Jobs and PowerShell

-

Azure

When your Azure App Service writes a lot of logs, these logs can quickly pile up and even hit your "File system storage" quota limits.

This was something I personally didn't pay attention to for quite some time and was surprised to find multiple gigabytes of logs sitting in my app service.

To solve this issue, you can use a PowerShell script and a time-triggered Azure Web Job.

Bulk add IP Access Restrictions to Azure App Service using Az PowerShell

-

Azure

Azure App Services are publicly accessible via Azure's public DNS in the format of "[NAME].azurewebsites.net", but there are many reasons why you may not want your app to be reachable through that DNS name. This script uses the Az PowerShell module to bulk add IP ranges to the Access Restrictions feature in App Service.

Bulk add Cloudflare's IPs to Azure App Service Access Restrictions using Az PowerShell

-

Azure

Azure App Services are publicly accessible via Azure's public DNS, but when using Cloudflare you should lock this down so that only Cloudflare can reach your service. This script adds all of Cloudflare's IP ranges to your App Service's Access Restrictions.

Bulk add Application Insights Availability Test IPs to Azure App Service Access Restrictions using Az PowerShell

-

Azure

Azure App Services are publicly accessible via Azure's public DNS, but you can lock this down using Access Restrictions. To ensure your Application Insights availability tests still work, you can use this PowerShell script to bulk insert all of their IP ranges.

Clearing Cloudflare cache using PowerShell in Azure DevOps Pipelines

-

Azure

Learn how to purge Cloudflare's cache as part of your Continuous Deployment. This post walks you through creating a PowerShell task that interacts with Cloudflare's API to clear the cache. This task will run as part of an Azure DevOps pipeline.

Social Sharing Buttons with zero JavaScript to Twitter, Reddit, LinkedIn, and Facebook

-

Web

Instead of slowing down our site and feeding advertisement profiles, we can use plain HTML to provide social sharing functionality. This post will cover social sharing to Twitter, Reddit, LinkedIn, and Facebook.

Creating a Discord Bot using .NET Core and hosting it on Azure App Services

-

Azure

Discord is an online communication platform built specifically for gaming. Using .NET Core and Azure App Service WebJobs we can host a Discord bot that can listen and respond to voice and text input.

PowerShell Snippet: Clearing Cloudflare Cache with Cloudflare's API

-

PowerShell

Cloudflare provides a GUI to purge the cache, but every action you can perform in the GUI, you can also perform with Cloudflare's API. You could use the API to automatically purge the cache whenever you update content in your CMS of choice, or purge the cache as part of your Continuous Delivery pipeline. Using PowerShell, we'll call Cloudflare's API to purge the cache.

Setting up Cloudflare Full Universal SSL/TLS/HTTPS with Azure App Services

-

Azure

Using Cloudflare's Universal SSL/TLS service, we can serve our website over a secure HTTPS connection. This post walks you through encrypting the connection from the client to Cloudflare and from Cloudflare to your Azure Web Application, using the Full (strict) option and Cloudflare's origin certificates.

PowerShell Snippet: Crawling a sitemap

-

PowerShell

Here's a PowerShell function that you can use to validate that all pages in your sitemap return an HTTP 200 status code.

You can also use it to warm up your website, or to ensure your website's cache is warm after a cold boot.

I personally use it as part of my Continuous Delivery pipeline to warm up my site and Cloudflare's cache.

Web performance: prevent wasteful hidden image requests (display: none)

-

Web

We often hide images using CSS with "display: none", but this doesn't actually prevent the browser from downloading these images. Using the HTML Picture element, we can serve different versions of an image depending on media queries. We can even use a data-img to prevent images from being downloaded at all and optimize our website speed.

Crawling through Umbraco with Robots

-

Umbraco

The robots.txt file’s main purpose is to tell robots (Google Bot, Bing Bot, etc.) what to index for their search engine, and also what not to. Usually you want most of your website crawled by Google, such as blog posts, product pages, etc., but most websites will have some pages/sections that shouldn’t be indexed or listed in search engines.

Ignoring Umbraco ping.aspx from Azure Application Insights

-

Umbraco

Application Performance Monitors provide you with a lot of data, but some of that data may not be relevant. Specifically, Umbraco frequently calls a page at /umbraco/ping.aspx to keep the site alive. This is useful to prevent the site from "dying" (being shut down for inactivity), but the data for this request isn't relevant and could skew your statistics. Using Azure Application Insights' ITelemetryProcessor, we can prevent ping requests from being sent to Azure Application Insights.

Crawling through Umbraco with Sitemaps

-

Umbraco

Websites come in all shapes and sizes. Some are fast, some are beautiful, and some are a complete mess. Even a high-quality site is of little use if people can’t find it, but search engines are here to help. Though the competition to get onto the first page is tough, this series will dive into some common practices to make your website crawlable.

Exploring VueJS and Firebase

-

Frontend

A while back, I started experimenting with the up-and-coming JavaScript library VueJS for an internal admin dashboard. I started learning about VueJS at Laracasts, which has a great, mostly free series about VueJS. I was a little skeptical about yet another JavaScript framework/library to solve our modern-day SPA needs. However, VueJS felt like a breath of fresh air: it is both refreshingly new and still very familiar (to AngularJS or MVVM in .NET).

Updated Ajax + SEO Guidelines

-

Web

When you build an Ajax-based website and want it to be SEO friendly, there are a couple of techniques you have to apply. Back in 2009, Google made a proposal for making Ajax pages crawlable.